Research - ITS

Detection and Class Recognition of Street Parking Vehicles Using Image and Laser Range Sensors

We propose a robust method for detecting street-parking vehicles and classifying them into five classes: Sedan, Wagon, Hatchback, Minivan, and Others. First, the system detects the vehicles from laser range data, using an algorithm for detecting and counting vehicles in laser range data that we proposed in previous work. This detection result is then applied to the image data to segment the vehicles from the background. Next, the vehicle classes are recognized with a vector quantization method, which is a modification of the Eigenwindow method we proposed earlier. The system showed high accuracy even when the vehicles were partially occluded by obstacles or had shadows cast over them.
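
The recognition step can be illustrated with a minimal Python sketch based on the general eigenwindow idea. Small training windows are projected into a low-dimensional eigenspace, and each projected window acts as a labeled codeword. Every window cut from a segmented vehicle then votes for the class of its nearest codeword. The function names, the use of every training window as a codeword, and plain nearest-neighbour voting are illustrative assumptions, not the authors' implementation. Their probabilistic formulation is not reproduced here.

```python
import numpy as np

CLASSES = ["Sedan", "Wagon", "Hatchback", "Minivan", "Others"]

def fit_eigenspace(windows, k):
    """windows: (N, D) flattened training windows. Returns the mean and top-k eigenvectors (k, D)."""
    mean = windows.mean(axis=0)
    _, _, vt = np.linalg.svd(windows - mean, full_matrices=False)
    return mean, vt[:k]

def project(windows, mean, basis):
    """Project flattened windows into the k-dimensional eigenspace."""
    return (windows - mean) @ basis.T

def build_codebook(train_windows, train_labels, mean, basis):
    # Hypothetical simplification: every projected training window is a codeword.
    # A real vector quantizer would cluster these (e.g. k-means) into fewer codewords.
    return project(train_windows, mean, basis), np.asarray(train_labels)

def classify(test_windows, mean, basis, codebook, code_labels):
    """Each test window votes for the class of its nearest codeword; returns (class index, votes)."""
    feats = project(test_windows, mean, basis)
    # Squared Euclidean distance from every test window to every codeword.
    d2 = ((feats[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    nearest_labels = code_labels[d2.argmin(axis=1)]
    votes = np.bincount(nearest_labels, minlength=len(CLASSES))
    return int(votes.argmax()), votes
```

Voting over many local windows is what makes this kind of scheme tolerant of partial occlusion. Windows covered by an obstacle or a shadow may cast wrong votes, but the majority can still come from the visible parts of the vehicle.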

Publications:

- S. Mohottala, M. Kagesawa, K. Ikeuchi, "A Probabilistic Method for Vehicle Detection and Class Recognition," The IEICE Transactions on Information and Systems, J89-D 4, pp.816-825, Apr. 2006.

Alignment of Actual Urban Image and Map using Temporal Height Image

Our goal is to align buildings between actual urban images and a digital map, and to estimate the location of the vehicle relative to those buildings. To extract buildings from actual urban images stably, we propose a novel representation of the space-time volume called the "Temporal Height Image" (THI). The THI is obtained by assigning every object in the space-time volume a gray value proportional to its height and then viewing the volume from above. The THI is similar in concept to the EPI (Epipolar Plane Image), but it overcomes a shortcoming of the EPI: edges inside a building disrupt the result. After removing electric wires with our novel method, we can stably separate the sky region from the building region. We then build THIs from both the actual urban images and the digital map, and align the buildings between the two THIs by DP-matching.
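
As a rough illustration of the two steps, the following Python sketch first builds a THI from a binary building/sky mask volume (True = building pixel, row 0 = top of the frame). For each frame and image column, it takes the height of the topmost building pixel. It then aligns two 1-D height profiles with classic dynamic time warping as a stand-in for DP-matching. The mask layout, the 0 to 255 gray scaling, and the absolute-difference matching cost are assumptions; the source does not specify them.

```python
import numpy as np

def temporal_height_image(building_mask):
    """building_mask: bool array (T, H, W); True = building pixel, row 0 = top of the frame.
    Returns a uint8 THI of shape (T, W) whose gray value is proportional to the height of
    the topmost building pixel in each column (0 where the column is all sky)."""
    T, H, W = building_mask.shape
    any_building = building_mask.any(axis=1)         # (T, W)
    top_row = building_mask.argmax(axis=1)           # index of first True from the top
    height = np.where(any_building, H - top_row, 0)  # height in pixels
    return (height * 255 // H).astype(np.uint8)

def dp_match(a, b):
    """DTW between 1-D sequences a and b with |a_i - b_j| as local cost.
    Returns (total_cost, path) where path is a list of matched (i, j) index pairs."""
    n, m = len(a), len(b)
    D = np.full((n + 1, m + 1), np.inf)
    D[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(float(a[i - 1]) - float(b[j - 1]))
            D[i, j] = cost + min(D[i - 1, j], D[i, j - 1], D[i - 1, j - 1])
    # Backtrack from the end to recover the warping path.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        step = int(np.argmin([D[i - 1, j - 1], D[i - 1, j], D[i, j - 1]]))
        if step == 0:
            i, j = i - 1, j - 1
        elif step == 1:
            i -= 1
        else:
            j -= 1
    return float(D[n, m]), path[::-1]
```

For example, one might compare a single column of each THI (a height profile over time) with the corresponding profile generated from the digital map. The source does not state which profiles are actually matched.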

Publications:

- J. Wang, S. Ono, K. Ikeuchi, "Temporal Height Image and Alignment of Actual Urban Image and Map by THI," Meeting on Image Recognition and Understanding (MIRU), Jul. 2008 (to appear).

Eigen Space Compression and Real-Time Rendering Techniques for Virtual City Modeling

We are developing a compression method based on the eigen image technique for sequences of omnidirectional images, together with a real-time rendering technique for IBR (Image-Based Rendering). Omnidirectional images (e.g., Fig. 1) contain redundant data because the same object is captured from multiple viewing directions, so efficient compression can be achieved by working in an eigenspace. In the actual implementation, we first rectify the images as shown in Fig. 2, divide them into n×m blocks, and track each block over time. Tracking is done in two steps: global tracking using EPI (space-time image analysis) and local tracking using block matching. Together these yield high similarity within each block set. The resulting block image sets are then compressed efficiently by KL expansion (principal component analysis). A scene is rendered by restoring all n×m block images, each computed as a product-sum of the eigen images and the weight coefficients. The weight coefficients are parameters determined by the angle of the viewpoint and the rendering plane. Because this operation is entirely linear, we run it in a fragment shader on the graphics card to achieve real-time rendering. Fig. 5 shows a rendering result.
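
The compression and product-sum reconstruction can be sketched in Python with NumPy. The sketch assumes each tracked block set is a stack of N equally sized gray-level blocks. KL expansion is computed here through an SVD of the mean-centered data. The function names and the choice of k are illustrative, and the sketch does not model how the weights are derived from the viewing angle at render time.

```python
import numpy as np

def compress_block_set(blocks, k):
    """blocks: (N, h, w) tracked block images of the same scene patch over N frames.
    Returns the mean image (h, w), k eigen images (k, h, w) and weight coefficients (N, k)."""
    N, h, w = blocks.shape
    X = blocks.reshape(N, h * w).astype(np.float64)
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    eigen = vt[:k]                    # orthonormal eigen images, flattened
    weights = (X - mean) @ eigen.T    # KL-expansion coefficients per frame
    return mean.reshape(h, w), eigen.reshape(k, h, w), weights

def reconstruct(mean, eigen, weight):
    """Product-sum of the eigen images with one weight vector (k,) plus the mean image."""
    return mean + np.tensordot(weight, eigen, axes=1)
```

The saving comes from storing the mean, k eigen images, and N×k weights instead of N full blocks, which pays off when k is much smaller than N. Reconstruction is a single linear combination per pixel, which is why it maps naturally onto a fragment shader.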

Publications:

- R. Sato, T. Mikami, H. Kawasaki, S. Ono, K. Ikeuchi, "Real-time Image Based Rendering Technique and Efficient Data Compression Method for Virtual City Modeling" (in Japanese), Meeting on Image Recognition and Understanding (MIRU), 2007.

- R. Sato, J. Oike, H. Kawasaki, S. Ono and K. Ikeuchi, "Eigen Space Compression for In-vehicle Camera Image and Realization of the Realistic Driving Simulator by the Real-time Reconstruction by GPU" (in Japanese), 6th ITS Symposium, pp. 125-130, 2007.

Localization Algorithm for Omni-directional Images by Using Image Sequence

We are developing a high-accuracy, robust Structure From Motion (SFM) method designed especially for omni-directional image sequences. SFM is a widely known method for simultaneously estimating the 3D geometry of a scene and the position and pose of the camera from image sequences, with pixel-order accuracy. However, it often behaves unstably depending on the initial parameters and on noise. Our approach first divides each omni-directional image into several partial images. The positions at which these parts are cut out of the image vary over time, which produces the effect of a virtual camera rotation. Each divided image is projected to a perspective view, feature points are detected in it, and the factorization method (an SFM technique) is applied to obtain an initial solution. Second, the computed 3D geometry of the scene is re-projected onto the input images. Bundle adjustment is then applied between the feature points and the re-projected points to refine the parameters obtained by the factorization method, which improves accuracy.
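
For reference, the following sketch shows the classic affine (Tomasi-Kanade) factorization and the reprojection error that bundle adjustment minimizes. It assumes an affine camera and omits the metric upgrade that resolves the affine ambiguity. The source does not state which factorization variant is applied to the perspective views, so treat this as an illustration of the general technique only.

```python
import numpy as np

def affine_factorization(W):
    """W: (2F, P) measurement matrix; rows 2f and 2f+1 hold the x and y image
    coordinates of P points tracked through frame f (affine camera assumed).
    Returns motion M (2F, 3), shape S (3, P) and translations t (2F,) with
    W ~= M @ S + t[:, None], up to an affine ambiguity (no metric upgrade)."""
    t = W.mean(axis=1)                     # image of the point centroid per row
    U, s, Vt = np.linalg.svd(W - t[:, None], full_matrices=False)
    root = np.sqrt(s[:3])                  # keep the rank-3 part
    M = U[:, :3] * root
    S = root[:, None] * Vt[:3]
    return M, S, t

def reprojection_rmse(W, M, S, t):
    """RMS image distance between measured and re-projected points.
    This is the quantity bundle adjustment would refine M, S and t to minimise."""
    R = W - (M @ S + t[:, None])
    return float(np.sqrt((R[0::2] ** 2 + R[1::2] ** 2).mean()))
```

With noise-free affine projections the rank-3 factorization reproduces the measurements exactly. With real, noisy perspective data, the residual it leaves is what the subsequent bundle adjustment step would work to reduce.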

Publications:

- Ryota Matsuhisa, Hiroshi Kawasaki, Shintaro Ono, Atsuhiko Banno, Katsushi Ikeuchi, "Localization Algorithm for Omni-directional Images by Using Image Sequence," Proceedings of the 2008 IEICE General Conference, D-12-82, p. 213, March 2008.

Mixed-Reality Traffic Experiment Space: - An Enhanced Traffic and Driving Simulation System -

Mixed-Reality Traffic Experiment Space is an integrated ITS simulation system developed through interfaculty collaboration at the ITS Center. It combines a traffic simulator (TS), a driving simulator (DS), and the latest visualization technologies (IMG). Users can experience novel simulations in realistic environments. Interactions between their own vehicle and surrounding vehicles, such as passing, are faithfully re-created to simulate traffic congestion, and the driving view is rendered with high photo-realism. For visualization, we use image-based rendering for distant areas, including the sky and buildings, based on real images captured in advance by our sensing vehicle. This replaces conventional fully model-based CG, which yields less realism at a huge human cost. This year we improved visual quality by removing electric wires, developed an eigen-texture-based data compression technology for long-duration sequences, and improved system portability so that the system can be extended to regional use. The system was exhibited at the Fukuoka Motor Show 2007 on Dec. 7-10.

Publications:

- S. Ono, R. Sato, H. Kawasaki, K. Ikeuchi, "Image-Based Rendering of Urban Road Scene for Real-time Driving Simulation," ASIAGRAPH, Jun. 2008 (to appear).

Collaborative Development of Next-Generation ITS Sensing Vehicle

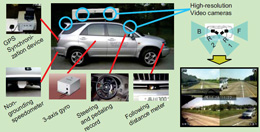

In recent ITS research, the quality required keeps rising in several areas: analysis of drivers and the driving environment, traffic flow analysis, and reconstruction and representation of virtual city models for navigation and driving simulation. Meanwhile, the technologies for acquiring and processing the real-world information these tasks require are still not well established. By designing a special sensing vehicle, we aim to develop methods to acquire and process real-world information in traffic scenes. This includes the geometric and photometric properties of structures, the behavior of the ego vehicle and surrounding vehicles, and the behavior of drivers. We then aim to apply the results to the construction of digital maps and the improvement of driving simulation. In the first year, the first versions of the vehicles were completed: ARGUS (a new vehicle) and MAESTRO (an upgraded vehicle, developed by the Kuwahara and Akahane Lab). In the second year, work is focused mainly on technological development, such as localization by signal processing, matching existing maps with scanned data, vibration correction by image processing, and construction of driving simulation from real images. For instance, in [2], an existing 2D digital residential map containing symbolic information such as shop names is matched by dynamic programming with fine 3D street geometry actually scanned by the vehicle, and the two are merged. The vehicles were exhibited at the Fukuoka Motor Show 2007 on Dec. 7-10.
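
A minimal sketch of DP-based map matching, under strongly simplifying assumptions. Each street side is reduced to a sequence of building frontage widths in metres, one taken from the 2D map and one from the scanned 3D geometry. The two sequences are then globally aligned with a fixed gap penalty, so that buildings missing on either side can be skipped. The function name, the width representation, and the gap value are hypothetical; the cost actually used in [2] is not given here.

```python
def align_widths(map_w, scan_w, gap=3.0):
    """Global DP alignment of two sequences of building frontage widths (metres).
    Matching two buildings costs |width difference|; skipping one costs `gap`.
    Returns (total_cost, list of (map_index, scan_index) matched pairs)."""
    n, m = len(map_w), len(scan_w)
    D = [[0.0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        D[i][0] = i * gap
    for j in range(1, m + 1):
        D[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            D[i][j] = min(D[i - 1][j - 1] + abs(map_w[i - 1] - scan_w[j - 1]),
                          D[i - 1][j] + gap,   # map building with no scanned match
                          D[i][j - 1] + gap)   # scanned segment with no map match
    # Backtrack to recover which buildings were paired.
    pairs, i, j = [], n, m
    while i > 0 and j > 0:
        if D[i][j] == D[i - 1][j - 1] + abs(map_w[i - 1] - scan_w[j - 1]):
            pairs.append((i - 1, j - 1))
            i, j = i - 1, j - 1
        elif D[i][j] == D[i - 1][j] + gap:
            i -= 1
        else:
            j -= 1
    return D[n][m], pairs[::-1]
```

Once buildings are matched, the symbolic attributes of each map building (such as a shop name) could be copied to its paired scanned segment.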

Publications:

- K. Ikeuchi, M. Kuwahara, Y. Suda, T. Tanaka, T. Suzuki, S. Tanaka, D. Yamaguchi, S. Ono, "Deployment of Sustainable ITS" (in Japanese), Proc. 6th Symposium on ITS, Dec. 2007.

- L. Tong, S. Ono, M. Kagesawa, "3D Modeling of a Residential Map by Matching with Range Images of Streets," Proc. ITS World Congress, Oct. 2007.